IDC (inverse-difficulty credit) is a points scale for exams where hard questions are worth fewer points. This gives good grade distributions on exams using mastery grading, where questions are only graded as correct or incorrect.

Why do we need IDC?

In the U.S., students are used to the “10-point” grading scale, where 90-100 points gets you an “A”, 80-90 gets you a “B”, etc. This means that we normally want our exam averages to be somewhere around 75 to 80 points out of 100, and we want almost no students to be below the cutoff of 60 for an “F” grade. Said another way, we want the grade distribution to be highly skewed towards the upper end of the points scale, but we still want to differentiate between the stronger students.

This can be a particular problem on exams with relatively few questions (fewer than 20) that use mastery grading, where each question is only graded as correct (full points) or incorrect (zero points). This grading scheme is very common with computer-based tests, where it’s hard to give meaningful partial credit. There are various complicated solutions, such as Computerized Adaptive Testing (CAT), but these require either accurate knowledge of question difficulty before the exam or score changes after the exam.

A simple fix: IDC

A straightforward solution is Inverse Difficulty Credit (IDC). In this system, we give questions over a range of difficulties and make them worth points in inverse relation to their difficulty. That is, easy questions are worth more, and hard questions are worth less. This means that almost all students can rapidly get about 50 points, providing a reasonable baseline score, and then the remaining points determine who gets which grade. The hardest questions (worth the fewest points) differentiate between the very strongest students.

Example exam using IDC

I find it helpful to break up questions into difficulty groups, such as:

| Question group | Points per question | Group total |
| --- | --- | --- |
| 3 easy questions | 20 points each | 60 points total |
| 2 medium questions | 15 points each | 30 points total |
| 1 hard question | 10 points | 10 points total |

Under this scheme, a very weak student would get 60 points (easy questions only), a medium student would get 75 to 90 (easy and some medium questions) and only the strongest students would get 100.

To put numbers on what we mean by easy/medium/hard questions and calculate an average exam score, we could consider numbers like:

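| Question group | Average correct rate | Points per question | Average points earned |
| --- | --- | --- | --- |
| 3 easy questions | 100% average | 20 points each | 60 points average |
| 2 medium questions | 60% average | 15 points each | 18 points average |
| 1 hard question | 20% average | 10 points | 2 points average |
| Total | | | 80 points average |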
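The average in the table above can be reproduced with a few lines of Python. This is only a sketch of the arithmetic: the correct rates are the illustrative numbers from the table, and the names `groups` and `expected_points` are made up for this example.

```python
# Each group: (number of questions, points per question, average correct rate in %)
# The rates are the illustrative values from the table, not measured data.
groups = {
    "easy": (3, 20, 100),
    "medium": (2, 15, 60),
    "hard": (1, 10, 20),
}


def expected_points(count, points, correct_pct):
    """Average points a class earns on one question group."""
    return count * points * correct_pct / 100


expected = {name: expected_points(*group) for name, group in groups.items()}
total_expected = sum(expected.values())
max_points = sum(count * points for count, points, _ in groups.values())

for name, value in expected.items():
    print(f"{name}: {value:.0f} points average")
print(f"total: {total_expected:.0f} of {max_points} points")
```

Changing the correct rates or the points per question in `groups` gives a quick estimate of how the exam average would move for a different mix of questions.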

More important than just the average score is what happens to the grade distribution. That is, what do weak/medium/strong students get? To get a sense of this we can consider different cases:

| Student | Questions correct | Score | Grade |
| --- | --- | --- | --- |
| Weakest student | Only easy questions correct | 60 points | D/F grade |
| Medium student | Easy and medium correct | 75-90 points | B/C grade |
| Strongest student | All questions correct | 100 points | A grade |

In the table above we see that even the weakest students get a score around 60, so no one is walking out of the exam devastated by a zero score. Most students are in the B/C range, and we have good differentiation for the strongest students, as it’s quite hard to score over 90 and get an A grade.
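To see which scores the example exam can actually produce, here is a small sketch that scores the cases above and then lists every total a student can reach once all three easy questions are correct. The names `POINTS`, `exam_score`, and `cases` are hypothetical, chosen just for this illustration.

```python
from itertools import product

# Points per question in each difficulty group (from the example exam).
POINTS = {"easy": 20, "medium": 15, "hard": 10}


def exam_score(correct):
    """Total score given the number of correct questions per group."""
    return sum(POINTS[group] * n for group, n in correct.items())


cases = {
    "weakest": {"easy": 3, "medium": 0, "hard": 0},
    "medium, one medium question": {"easy": 3, "medium": 1, "hard": 0},
    "medium, both medium questions": {"easy": 3, "medium": 2, "hard": 0},
    "strongest": {"easy": 3, "medium": 2, "hard": 1},
}
for label, correct in cases.items():
    print(f"{label}: {exam_score(correct)} points")

# Every score reachable when the three easy questions are all correct:
# 0-2 medium questions combined with 0-1 hard questions.
reachable = sorted(
    {
        exam_score({"easy": 3, "medium": m, "hard": h})
        for m, h in product(range(3), range(2))
    }
)
print(reachable)
```

With only six questions the possible scores are coarse, but they are spread across the 60 to 100 range rather than clustered at either end.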

Extra safety nets

One small risk with IDC scoring is that a student might get one of the easy questions wrong and miss out on 20 points in one go. To reduce the chance of this happening, some options are:

- Give multiple attempts at easy questions with a generous score curve. For example, in PrairieLearn we could use a points list of [20, 19, 18, 17, 16] so that students can have five attempts at each question, only losing one point each time they re-attempt an answer.

- We could also use “best 3 of 4” scoring by giving four easy questions each worth 20 points, but only counting the highest three scores from them. This can be implemented with the `bestQuestions` option in PrairieLearn.

- Alternatively, we could use a “points cap” for the easy questions. Here we would give four easy questions each worth 20 points, but cap the total points available for the easy questions group to 60 points. This can be done with the `maxPoints` option for a zone in PrairieLearn.
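The three safety nets differ slightly in how they treat partial losses, which is easier to see with numbers. The sketch below is a standalone illustration of the arithmetic, not PrairieLearn's implementation; the function names `attempt_points`, `best_n_of`, and `capped_total` are invented for this example.

```python
def attempt_points(points_list, first_correct_attempt):
    """Points earned when the first correct answer comes on the given attempt.

    Attempts are numbered from 1; running out of attempts earns zero.
    """
    if 1 <= first_correct_attempt <= len(points_list):
        return points_list[first_correct_attempt - 1]
    return 0


def best_n_of(scores, n):
    """Sum of the n highest question scores ("best 3 of 4" when n is 3)."""
    return sum(sorted(scores, reverse=True)[:n])


def capped_total(scores, cap):
    """Sum of all question scores, limited to the points cap."""
    return min(sum(scores), cap)


# Multiple attempts: correct on the third try loses only two points.
print(attempt_points([20, 19, 18, 17, 16], 3))

# Four easy questions with one missed entirely: both schemes give full credit.
missed_one = [20, 20, 0, 20]
print(best_n_of(missed_one, 3), capped_total(missed_one, 60))

# Four easy questions, each eventually correct but after some retries:
# best-3-of-4 drops the lowest score, while the cap absorbs all the losses.
retries = [20, 19, 18, 17]
print(best_n_of(retries, 3), capped_total(retries, 60))
```

In this sketch the points cap is the more forgiving of the two group-level options, because small losses spread over several questions can all be recovered by the extra question.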

Real-world data

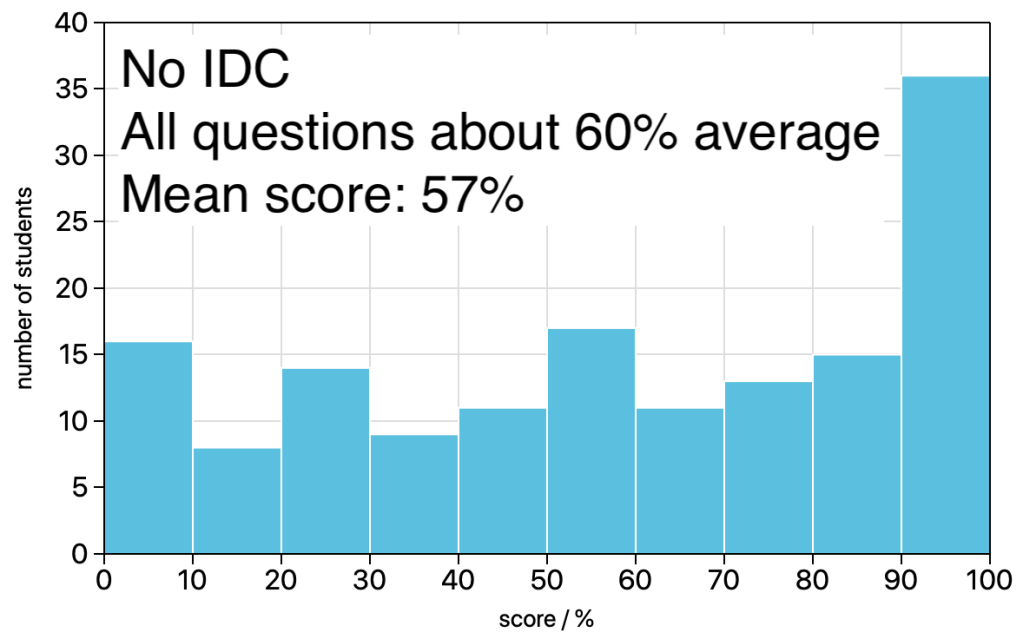

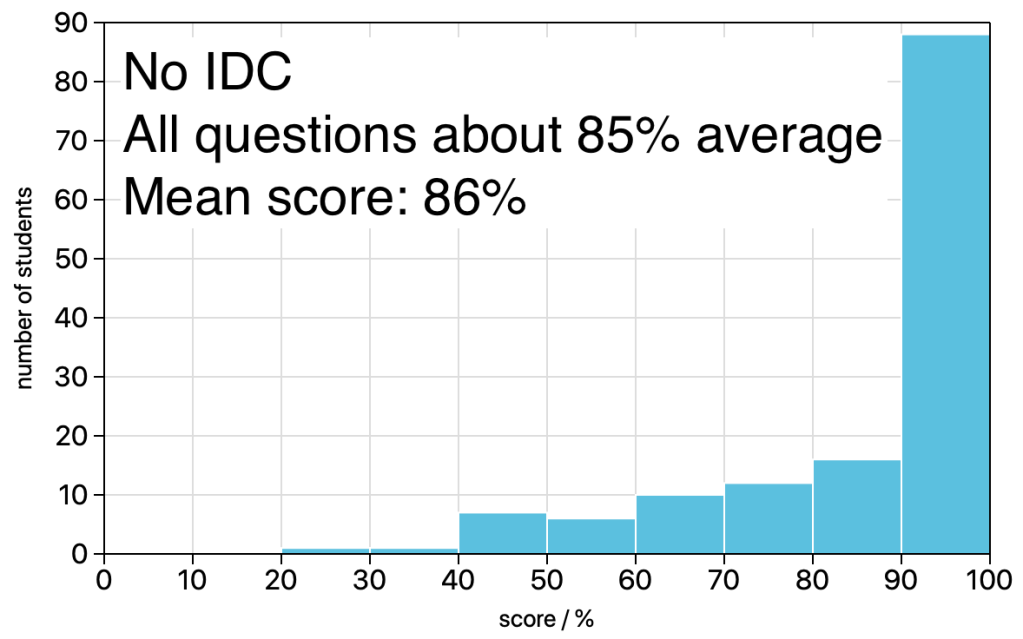

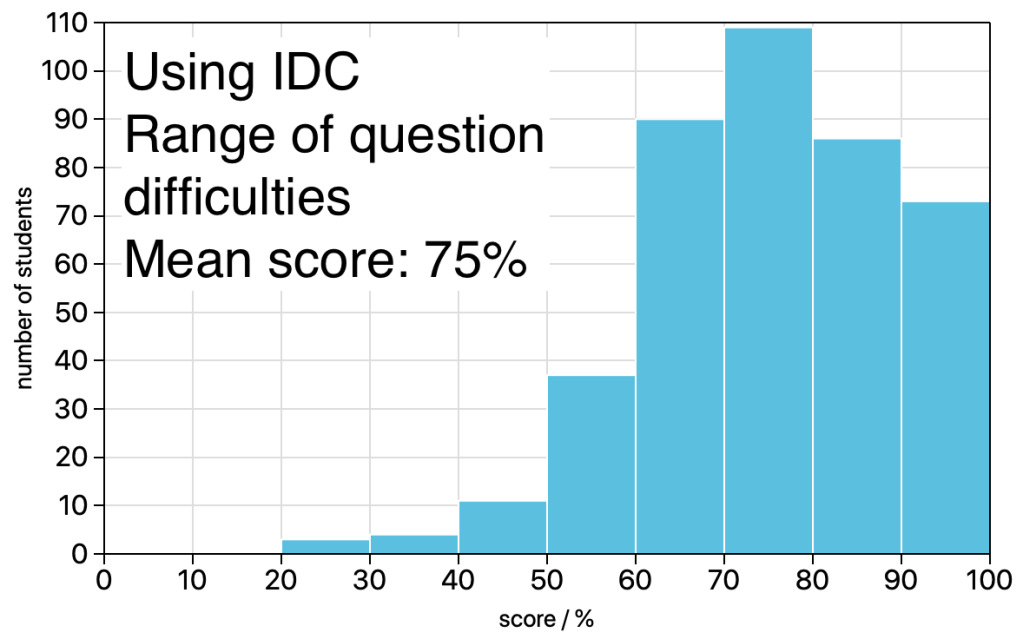

I pulled some real exam score distributions from TAM 2XX courses (the introductory mechanics sequence) at Illinois in the 2019/2020 academic year to see how different scoring systems play out in practice.

First is a non-IDC exam with five questions, all of about equal difficulty with an average correct rate of approximately 60%. This resulted in strong students answering everything correctly and getting 100, while weak and medium students often scored very low and many “failed the exam” in the sense of getting below 50 or 60 points. In particular, the weakest students couldn’t do any of the questions and got zero, which is psychologically hard to take:

Second, an immediate fix for the “hard exam” above is to make all the questions easier. Below we have a non-IDC exam with four questions, all with an average correct rate of approximately 85%. This succeeded in pushing the average exam score higher, but now almost all students were piled up near 100. Despite this super-easy exam, there are still students scoring below 50 because they struggled to do any of the questions:

Third, we have an IDC exam with six questions covering a range of difficulties. The easiest questions were worth 20 points and had average correct rates of about 95%, while the hardest were worth 10 points and had correct rates of about 30%. This resulted in a low-but-reasonable mean exam score of 75. Despite this somewhat low mean, relatively few students “failed” the exam and no student scored zero. In addition, there was no “pile-up” of scores at 100, as the hardest questions provided good differentiation at the top end:

Finally, I should emphasize that much of the benefit in the IDC exam above is from using a range of question difficulties, rather than making all questions similarly hard. IDC reinforces this difficulty spread to compress the score distribution into the 60+ range while still maintaining good differentiation above 90.